Organising the Archive

-

Lia, are you a borg?

love the lost ark warehouse btw -

@b0ardski Might make things easier if I was. Still wrapping me head around how I'll get a script to interpret and pull what I want. Might be a few days of trial and error with lots of googling (as usual).

Glad you noticed, first thing that came to mind when thinking of an endless warehouse :)

-

i recommend using jupyter notebooks to start. can really quickly test ur code. if ur using javascript, here's something else u might be interested in.

-

@notsure Might try python with the first option. I hear it's good for beginners.

Will save me butchering whatever language is used to write batch scripts that I used to do for one off tasks. -

@lia javascript

-

-

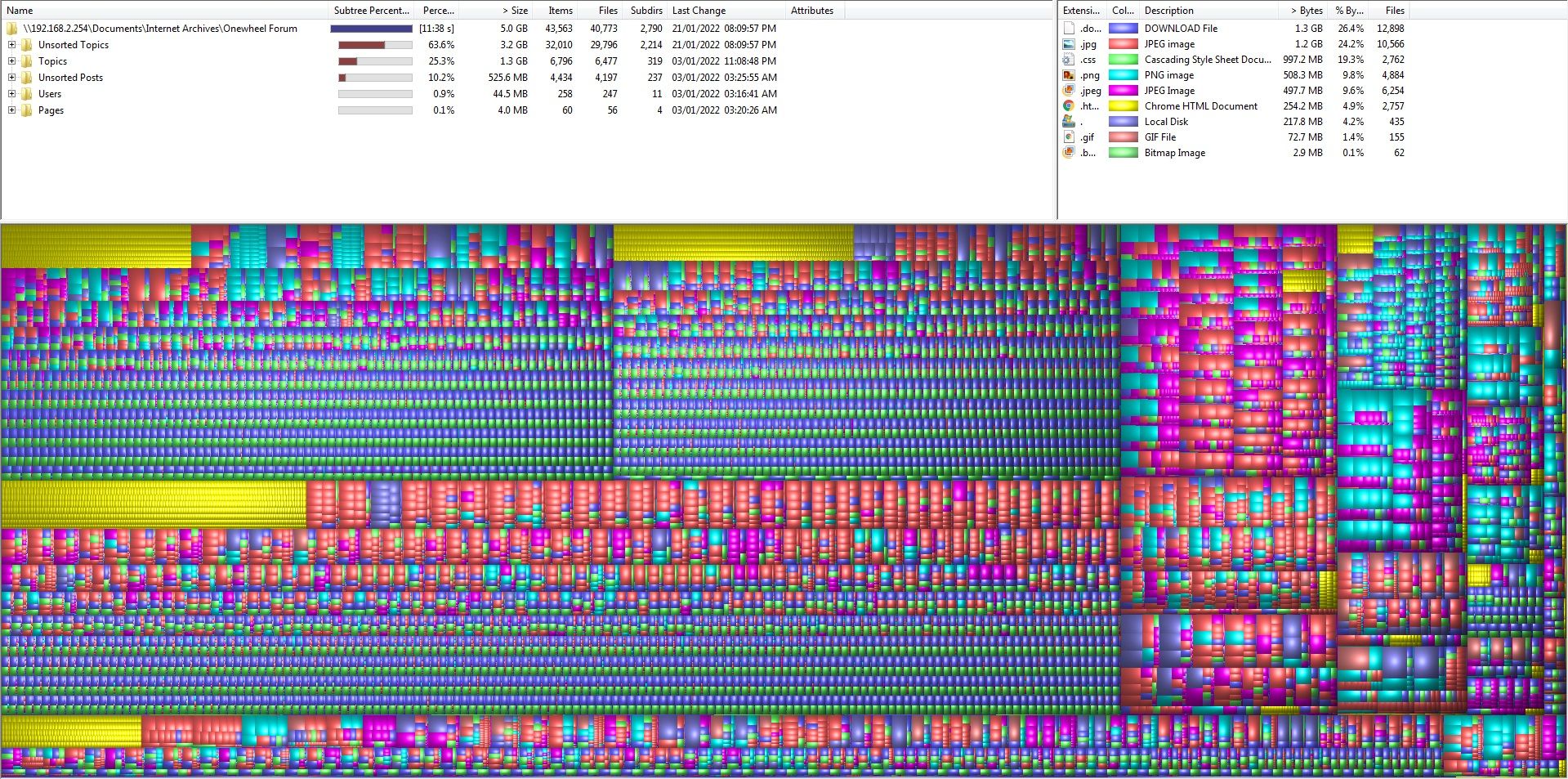

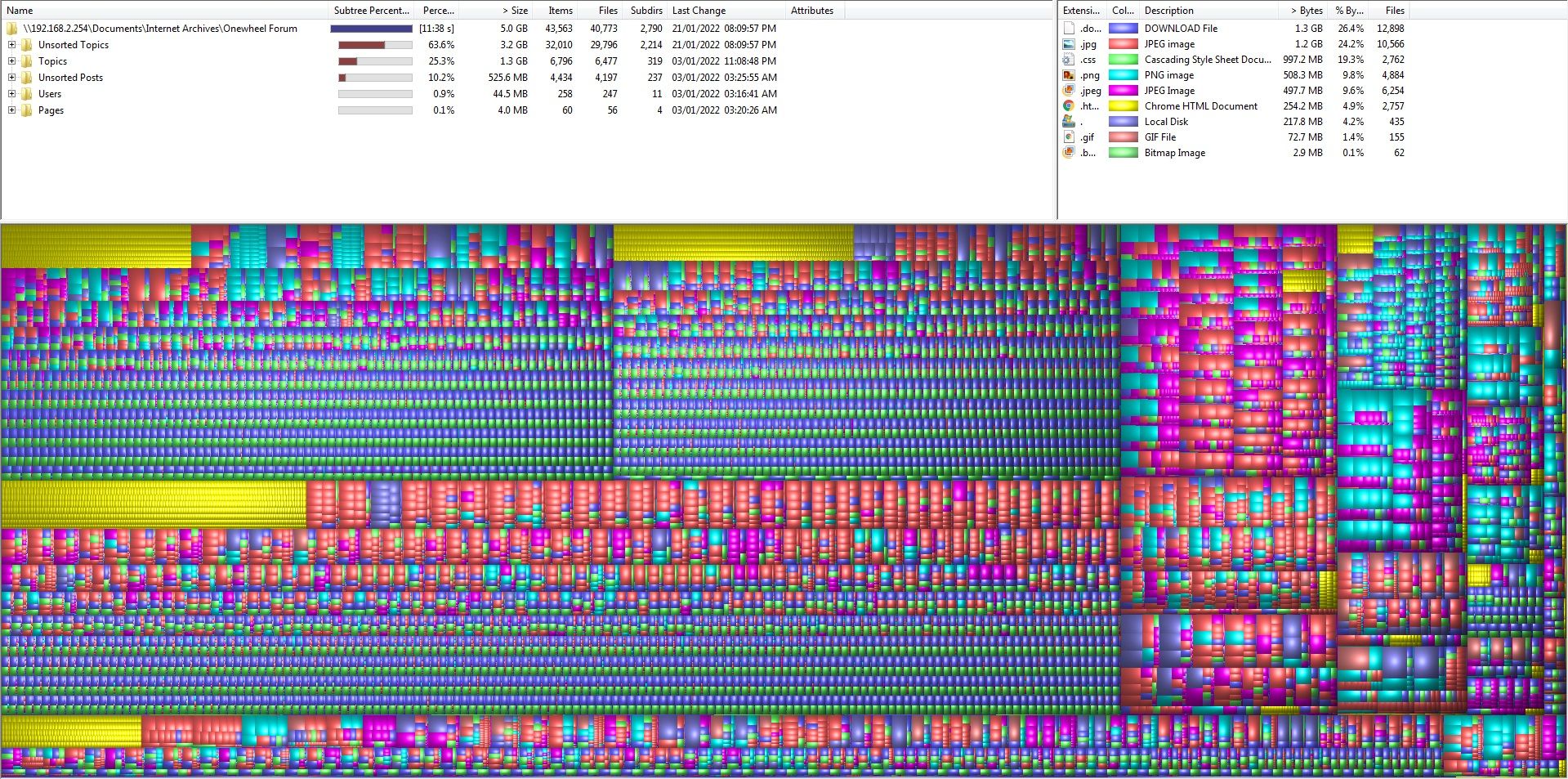

9600 topics manually googled with the cached results downloaded if they came up.

Next up... making a master template to begin merging the pages together.After that I'll host it on the site and setup a way for people to send me missing posts/pages for me to add later. A lot can be found from archive.org but I got the time sensitive stuff for now.

-

@lia said in Organising the Archive:

9600 topics manually googled with the cached results downloaded if they came up.

"um, what exactly do u mean 'bad disk sector'?"

-

Just a little update.

I have manually stitched together the "Reviving a destroyed Pint" thread and looking into a means of hosting it before working through the rest of the threads and creating placeholder elements to occupy posts withing threads that have yet to be found and merged.

Since the pages used to rely on javascript and some other nodebb side dependencies I'm considering hosting the archive on a separate server then routing a subdomain to it, like archive.owforum.co.uk in order to keep both systems isolated.

(edit: corrected the example to an actual subdomain, former example was a subdirectory which takes way more effort to dump on a separate server)Main reason being security. I don't know what holes might get opened by just hosting ripped webpages but I imagine there could be a few so if I keep them separate then at worst the archive gets taken out for a few days before I initiate a backup. That then leaves the new forum safe from that. It also means I'll be able to tweak the archive at any point without accidentally nuking the forum.

-

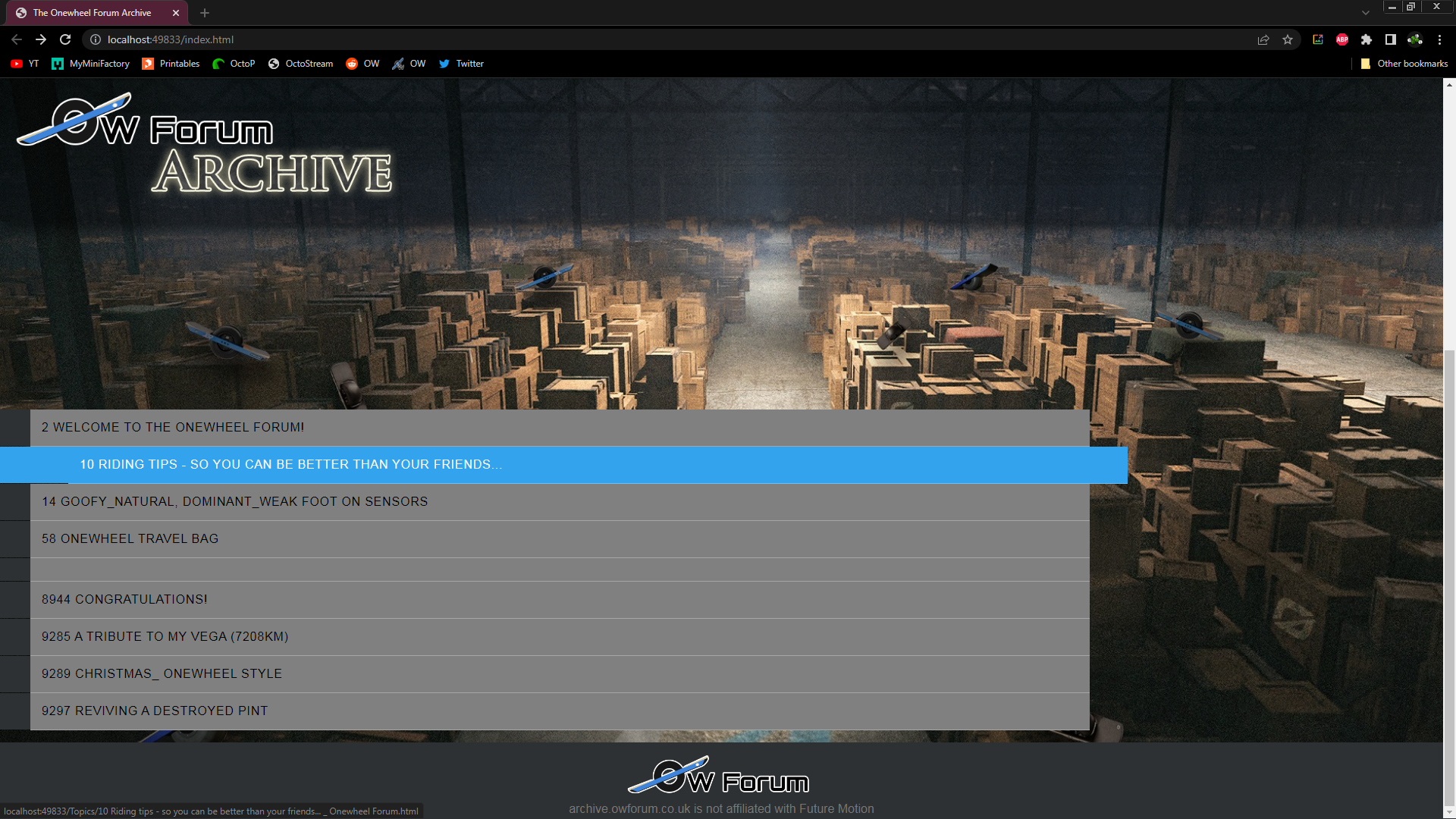

I am not joking when I say I spent ALL of today trying to make the landing page for the archive ready to make it live with some preliminary pages...

I hate code! (because I'm bad at it) I was going to use some online tools but my caffeine addled brain thought it was up to the challenge. So many tutorials that don't wooooooork adsfgsbglgdg

H O W E V E R...

It pwetty~

Backdrop isn't staying (maybe), I'd like to get something else but for now it's a fun placeholder.

Haven't cleaned this page up yet, considering replacing all the headers with the archive logo that will act as a means to return to the landing page.

Still plenty more to do to make it usable before I even let it loose on the internet. The actual old pages need a lot of manual tinkering to make them presentable since google butchered them when caching. archive.org didn't do too well either on some but I've figured out what's mostly broken to fix them up.

Big shoutout to @cheppy44 for giving me a boost in motivation which I funnelled into this.

Now I have to get over the prospect of manually reparing the files I got.

All... of them... oh no...

Gonna need more monster. RIP diet.

-

@lia what do you have to do to repair the files?

-

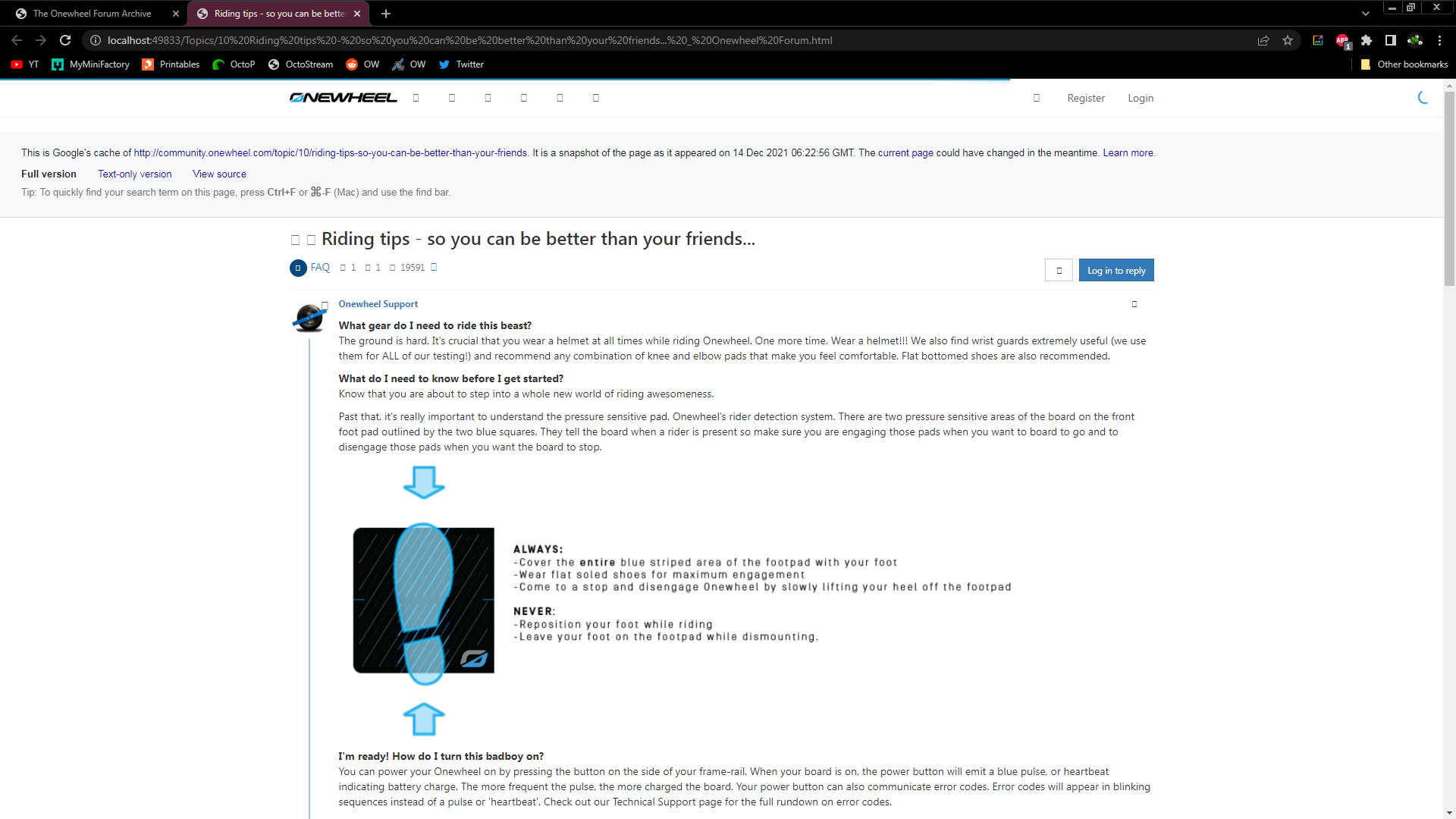

@cheppy44 Depends on the topic, there are 2 issues plaguing some of the topics I got.

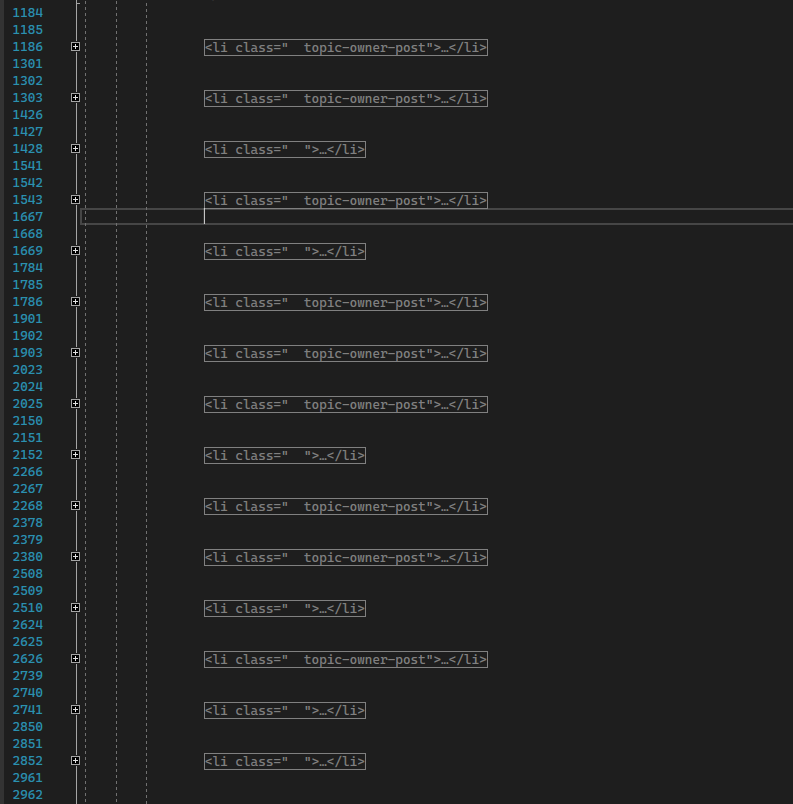

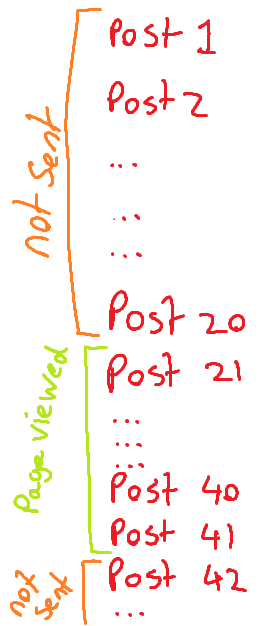

First one is all the larger topics are broken into smaller captures. The old forum (and this one since it's the same software) had scripts to only load 20 posts per view that would call to unload and load depending on which way you're scrolling. This meant all the web crawlers would pickup chunks of the forum depending on what post they landed on based on the post ID in the URL.

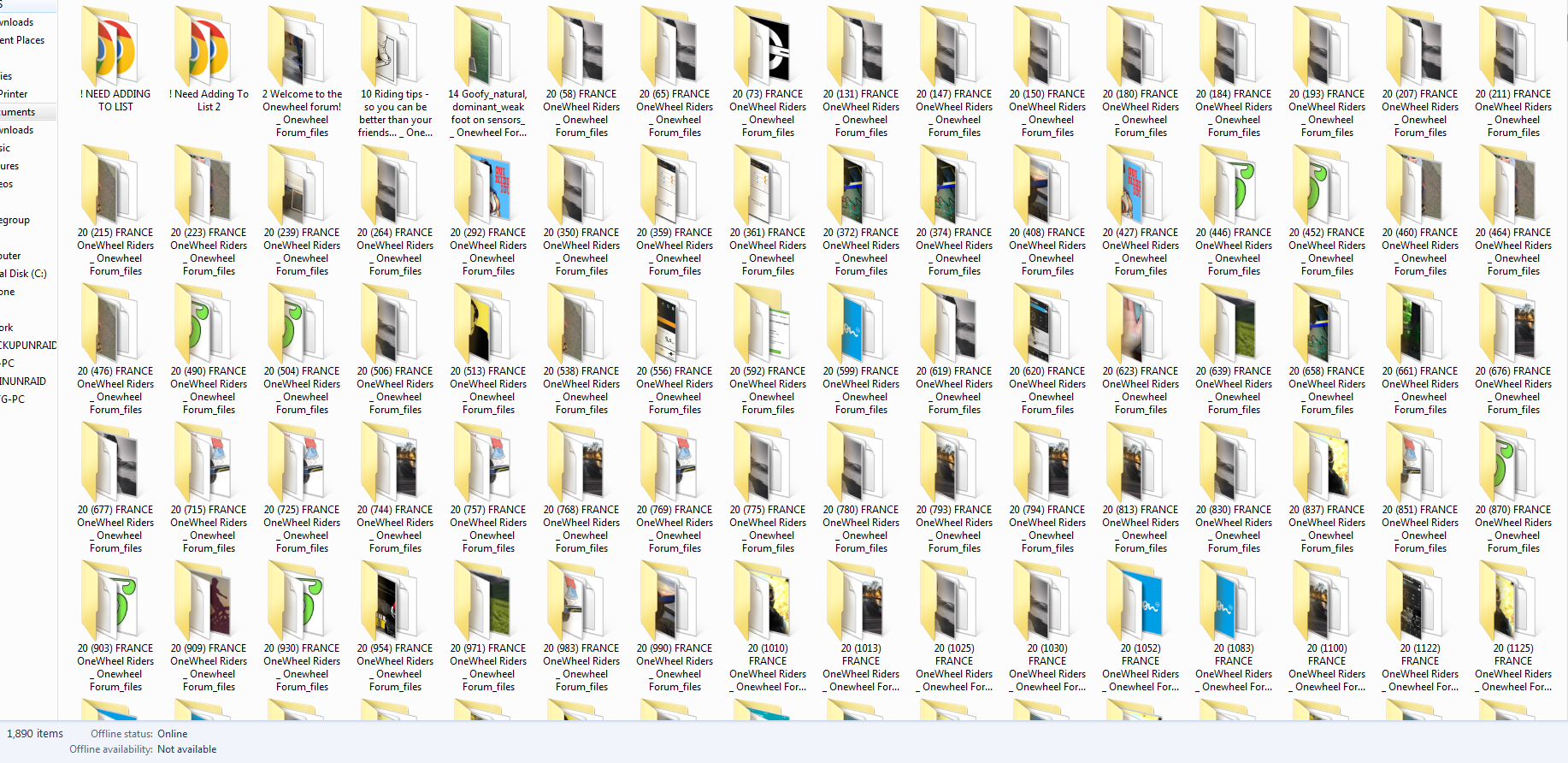

For instance an early topic (#20) was the France riders that had over 2000 posts. As such I've got various iterations of the topic that occupy different chunks of it.

I downloaded the pages, prefixed the name with the topic ID then Post ID in the brackets if it wasn't the first page.

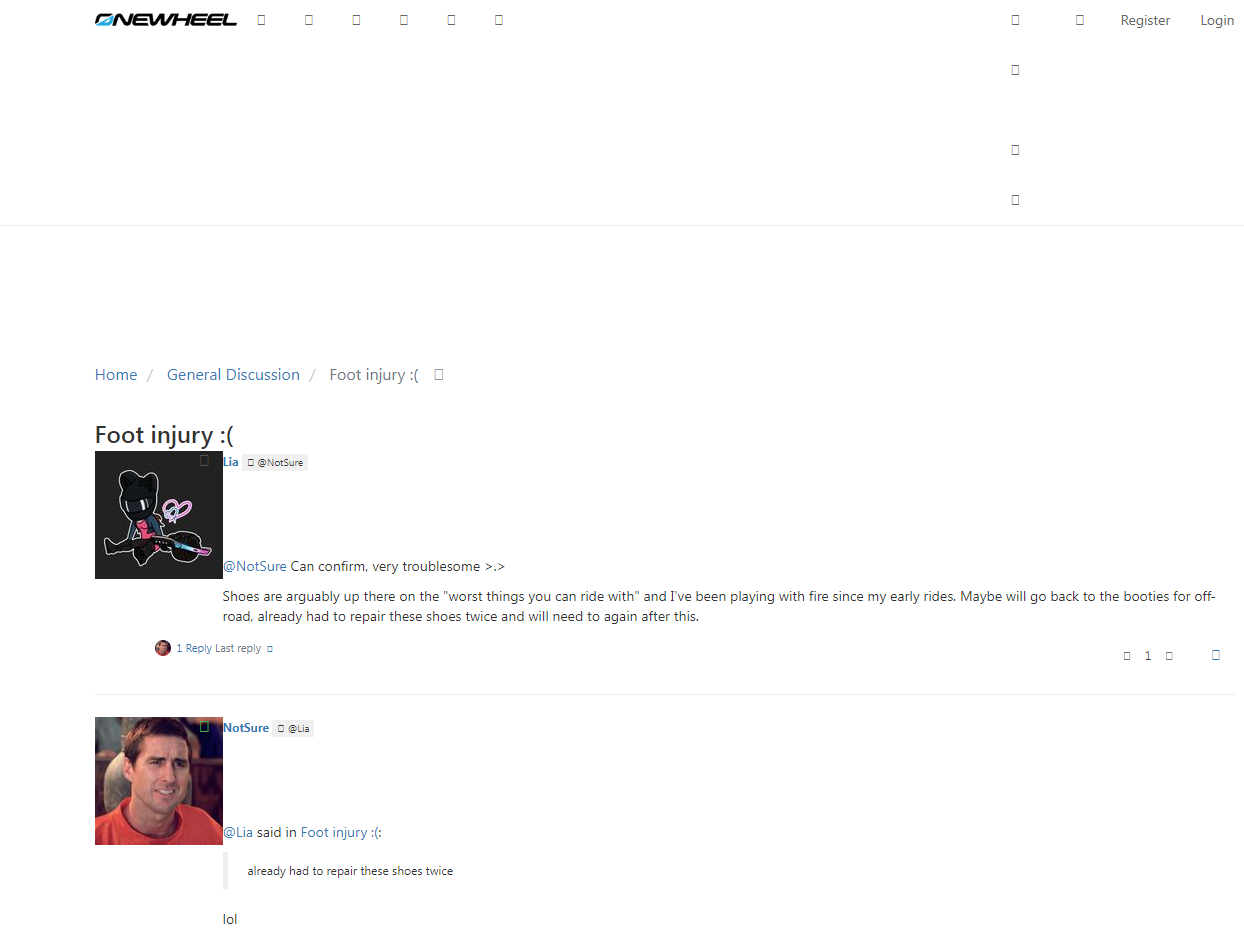

Second issue is sometimes the webcrawler didn't grab a working version of the site so elements end up really scrambled like below.

Icons and header is utterly borked compared to what you see on this working forum.I'm sure I'll find a streamlined way to do it eventually. Did want to make a python script but looking at the scope of it I realised the tool would have to be super complex to account for the variety of edge cases on the thousands of files. In the meantime I'll focus on the more popular and sort after topics.

-

@lia I wish you the best of luck! if there is somehow to help I am willing to put some time into it too :)

-

@cheppy44 Thanks :)

I think once I get a functional site up for it I might ask if anyone more competent can look over the pages (I'll make the html, css and scripts available) to see if there are better ways to achieve the desired function. -

@lia said in Organising the Archive:

Did want to make a python script but looking at the scope of it I realized the tool would have to be super complex to account for the variety of edge cases on the thousands of files. In the meantime I'll focus on the more popular and sort after topics.

This could either be super easy or super difficult to hem together the individual segments into a complete thread. It will depend upon how the server implements the tagging mechanism when generating each page.

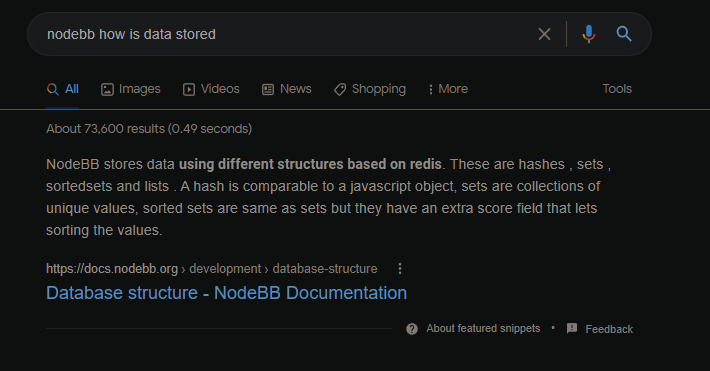

that is where to start ur planning imo. how does the server store data and generate a page from that data.

then you just need to merge the properly cleansed data to a copy of the existing data set for testing. if it works, ur good.

Cleansing this much loosely structured data would certainly be a hellish chore manually, and even still as a scripted application.

Firstly, I'm a data analyst, not a web developer, so others may have better opinions. if i were to approach the problem, it would start with data cleansing.

Each data set would be programmatically cleansed of irrelevant data, leaving a simple html segment with the desired components.

the css for each html can be treated in two ways, either left alone and pray for no bugs, or tossed all together and redone. The html is what matters and can probably be stylized afterwards.

I would prefer the former solution cuz its easier, but i also find myself doing both in the end cuz u know... the universe.

u may not have to process tags any further since each page was likely generated procedurally, so they may be identically tagged.

my real hope would be for more or less plug and play css.

thats why i don't do front-end at all.

users r so nitpicky! just eat this wall of text u ape!

-

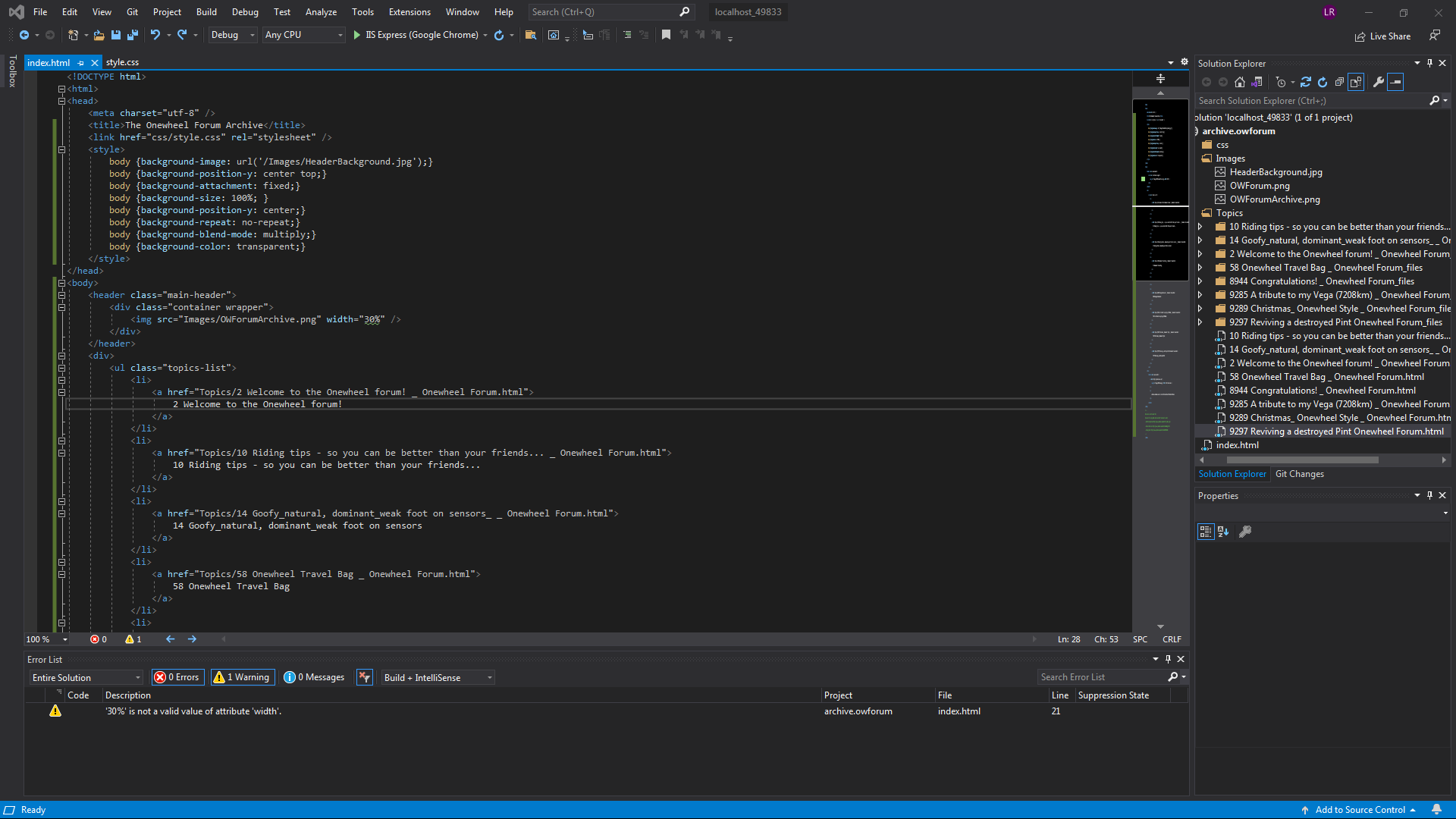

@notsure Thanks for the input :) I personally don't think I'll write a script for this and hand repair them since it's well out of my toolset. That said I may later take a swing at it now that I'm sort of getting to grips with Visual Studio.

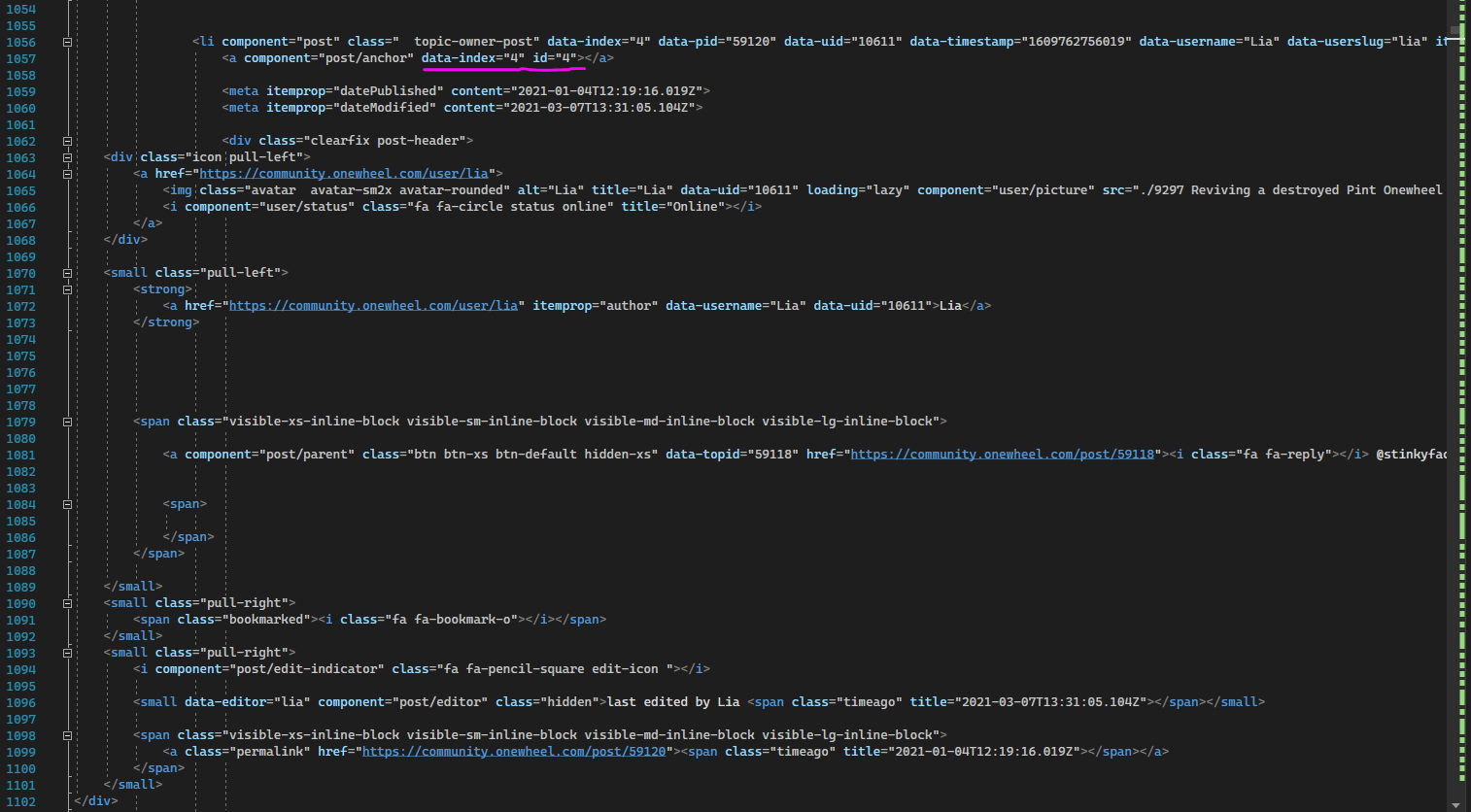

For the one that I've merged myself the individual posts are contained within a list tag "<li>...</li>" each.

The post ID is a bit buried though as "data-index".

I imagine the script would have to look through a page for "data-index=" then expand it's selection between the previous <li> tag and the closing </li> tag. However it would need to lookout for additional list tags within the document which complicates matters.

I found it easier to close all tags then copy the 20 or so blocks into the "master" document.

For the France topic and a few others I might separate some into pages since they're far too big to not destroy someones browser.

@notsure said in Organising the Archive:

users r so nitpicky! just eat this wall of text u ape!

My eyes snapped to this when I saw the wall. I am at heart illiterate ;)

-

@lia said in Organising the Archive:

For the one that I've merged myself

nodebb uses a redis distribution for its database. that means ur not done learning perusing doc notes yet lol... redis is a database. it's how nodebb stores its data. u learn redis, u learn nodebb.

-

@lia I really like the background, I think you should keep it.

If I understand you, the pages you have (e.g. France riders) are each a subset of comments/replies (up to 20) and you need to stitch it back together into a single strand using overlapping context. If so, this is actually very similar to how DNA sequencers work (just a fun aside).

If that is what you need, and you were able to share the files you have, I should be able to pretty easily reconstruct a single file sequence. If you had a google drive or something and uploaded an example like the France riders one as a zip or something, I should be able to write a relatively straightforward script to stitch it all back together and upload back a single HTML file.

If that worked, we could figure out a process to run through the entire archive. In the process, I could also make any HTML header/footer/etc. alterations you would want.

-

@biell she's concerned about embedded bugs in the source. i would be too considering she'd be hosting it without knowledge of how to maintain it. if she could iterate thru that html, pull proxy user ids n store the relevant data, she could simply lock those ids n reduce maintenance. in my humble opinion. what do u think?

-

@notsure That bit's fine, since I'm not rebuilding the site but just fixing the HTML output that it spits out since that's what I have saved. As such I'll try to strip out any calls for server dependencies so it's not calling to a site that doesn't exist.

I did consider building a replica nodebb install then stripping and entering data into the database to replicate the old server but that sounded way too involved and didn't feel right.

@biell Thanks :)

It's a render someone did of the scene from Raiders Of The Lost Arc and I just added some OW stuff on top. If I plan to use it I'll see if I can ask the artist if they're okay with it and if they want any credit added anywhere since their signature gets lopped off at the side D:That's pretty much it. The site seemed to have a script to see what part of the page you were viewing then only send that data. Excuse the crude doodle.

I can look at putting one of them on a GDrive later on if you're up for it :) Thanks for the offer, no worry if it's too much effort though, really appreciate the support.